JAIS 30b Chat

Version: 3

Models from Microsoft, Partners, and Community

1P and 3P MaaS models are a select portfolio of curated models, both general-purpose and niche, across diverse scenarios, developed by Microsoft teams, partners, and community contributors.

- Managed by Microsoft: Purchase and manage models directly through Azure with a single license, world-class support, and enterprise-grade Azure infrastructure.

- Validated by providers: Each model is validated and maintained by its respective provider, with Azure offering integration and deployment guidance.

- Innovation and agility: Combines Microsoft research models with rapid, community-driven advancements.

- Seamless Azure integration: Standard Azure AI Foundry experience, with support managed by the model provider.

- Flexible deployment: Deployable as Managed Compute or Serverless API, based on provider preference.

Key capabilities

About this model

JAIS 30b Chat from Core42 is an auto-regressive bilingual LLM for Arabic and English with state-of-the-art capabilities in Arabic.

Key model capabilities

Core42 conducted a comprehensive evaluation of Jais-30b-chat and benchmarked it against other leading base and instruction-finetuned language models, focusing on both English and Arabic. The evaluation criteria span various dimensions, including:

- Knowledge: How well the model answers factual questions.

- Reasoning: The model's ability to answer questions that require reasoning.

- Misinformation/Bias: Assessment of the model's susceptibility to generating false or misleading information, and its neutrality.

Use cases

See Responsible AI for additional considerations for responsible use.

Key use cases

The model is trained as an AI assistant for Arabic and English speakers.

Out of scope use cases

The model is limited to producing responses for queries in these two languages and may not produce appropriate responses to other language queries. By using JAIS, you acknowledge and accept that, as with any large language model, it may generate incorrect, misleading and/or offensive information or content. The content generated by JAIS is not intended as advice and should not be relied upon in any way, nor are we responsible for any of the content or consequences resulting from its use.

Pricing

Pricing is based on a number of factors, including deployment type and tokens used. See pricing details here.

Technical specs

The provider has not supplied this information.

Training cut-off date

The pretraining data has a cutoff of December 2022, with some tuning data being more recent, up to October 2023.

Training time

The provider has not supplied this information.

Input formats

The provider has not supplied this information.

Output formats

The provider has not supplied this information.

Supported languages

Arabic and English

Sample JSON response

The provider has not supplied this information.

Model architecture

The model is based on a transformer-based, decoder-only (GPT-3) architecture and uses the SwiGLU non-linearity. It uses ALiBi position embeddings, which enable the model to extrapolate to long sequence lengths and improve context-length handling. The tuned versions use supervised fine-tuning (SFT).

Long context
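As a rough illustration of the ALiBi position scheme named in the model architecture description, the sketch below computes per-head linear attention biases in plain Python. It follows the general ALiBi recipe (a geometric sequence of head slopes, with a penalty proportional to query-key distance) and is not Core42's implementation; the head count and sequence length are hypothetical values chosen for readability.

```python
def alibi_slopes(num_heads):
    """Geometric slope sequence used by ALiBi for a power-of-two head count."""
    if num_heads <= 0 or (num_heads & (num_heads - 1)) != 0:
        raise ValueError("this sketch only handles power-of-two head counts")
    start = 2.0 ** (-8.0 / num_heads)
    # Head h (0-based) gets slope start^(h+1): 1/2, 1/4, ... for 8 heads.
    return [start ** (h + 1) for h in range(num_heads)]


def alibi_bias(num_heads, seq_len):
    """Return bias[head][query][key], to be added to raw attention scores.

    Keys at or before the query get -slope * distance; keys after the
    query get -inf, which folds the causal mask into the same tensor.
    """
    bias = []
    for slope in alibi_slopes(num_heads):
        head = []
        for q in range(seq_len):
            row = [
                -slope * (q - k) if k <= q else float("-inf")
                for k in range(seq_len)
            ]
            head.append(row)
        bias.append(head)
    return bias


# Hypothetical sizes, small enough to inspect by hand.
example_bias = alibi_bias(num_heads=8, seq_len=4)
```

Because the penalty depends only on the distance between query and key, and not on learned absolute positions, the same rule applies unchanged to sequences longer than those seen during training.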

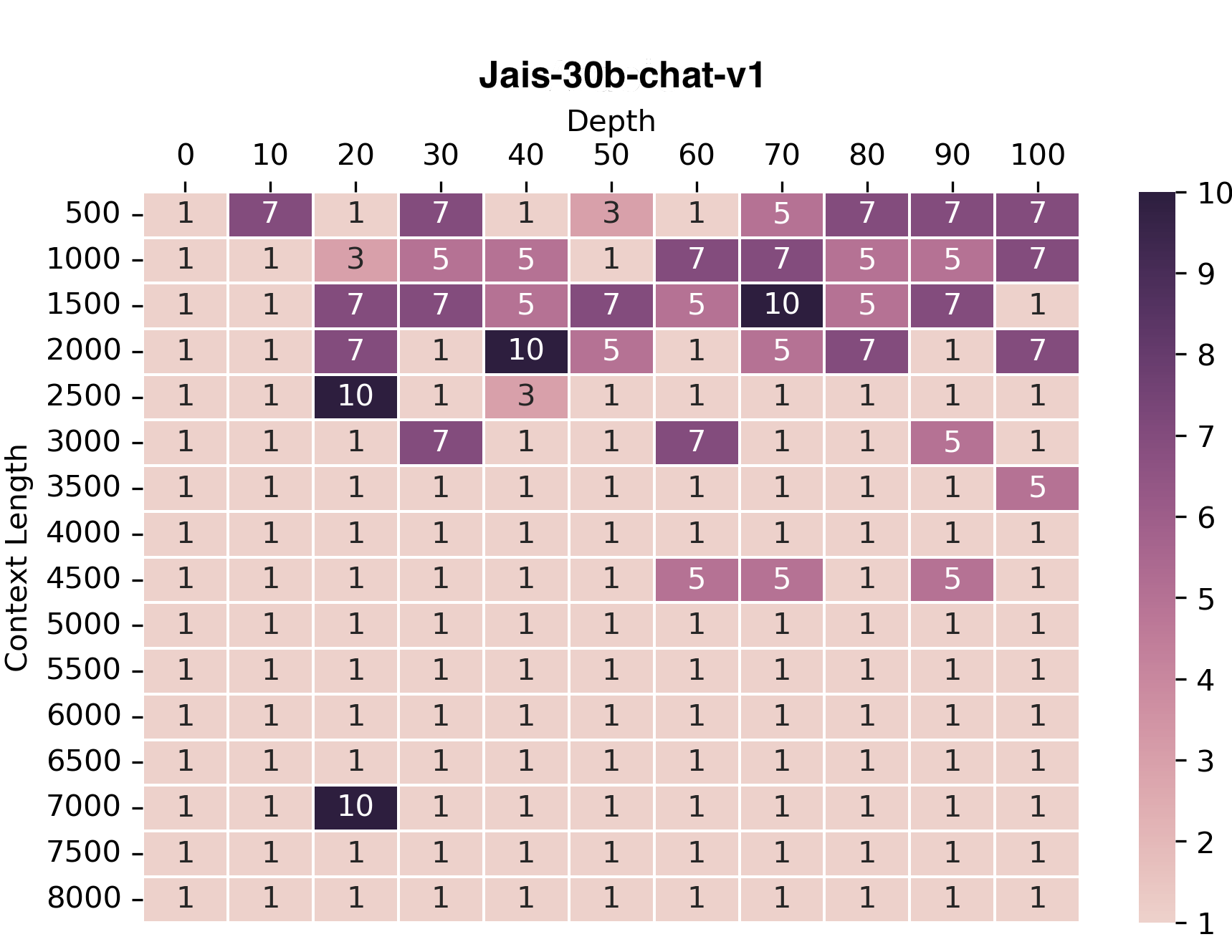

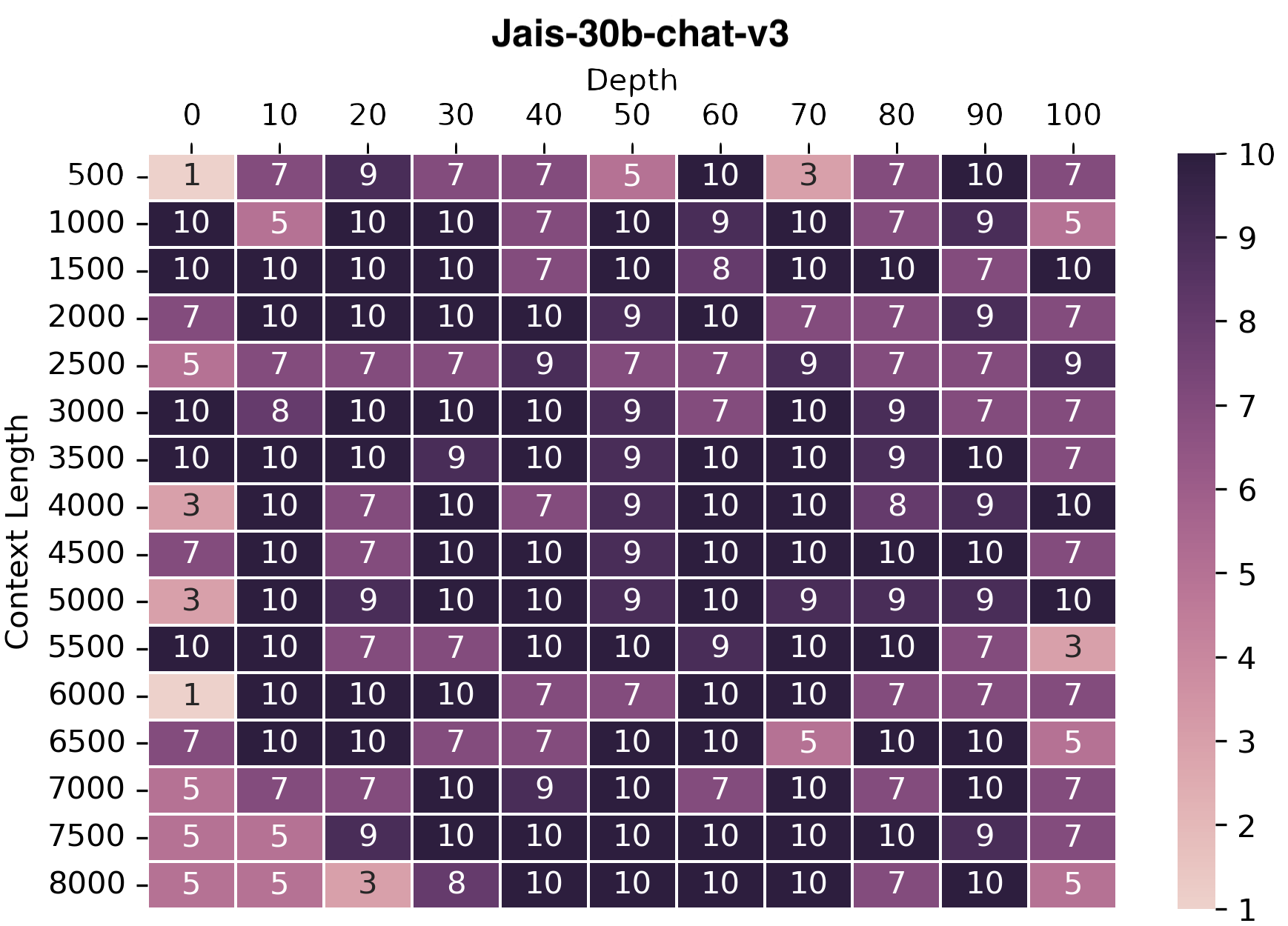

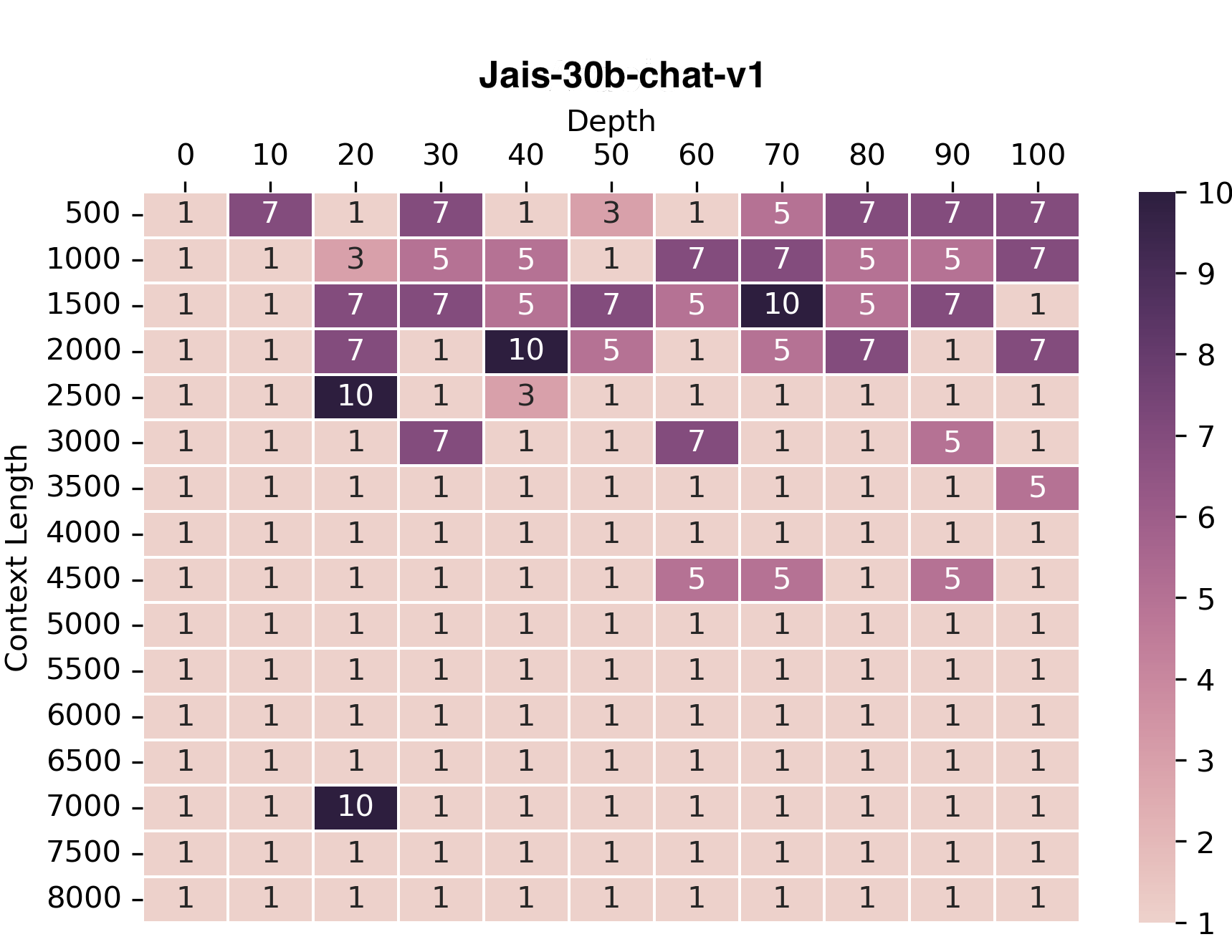

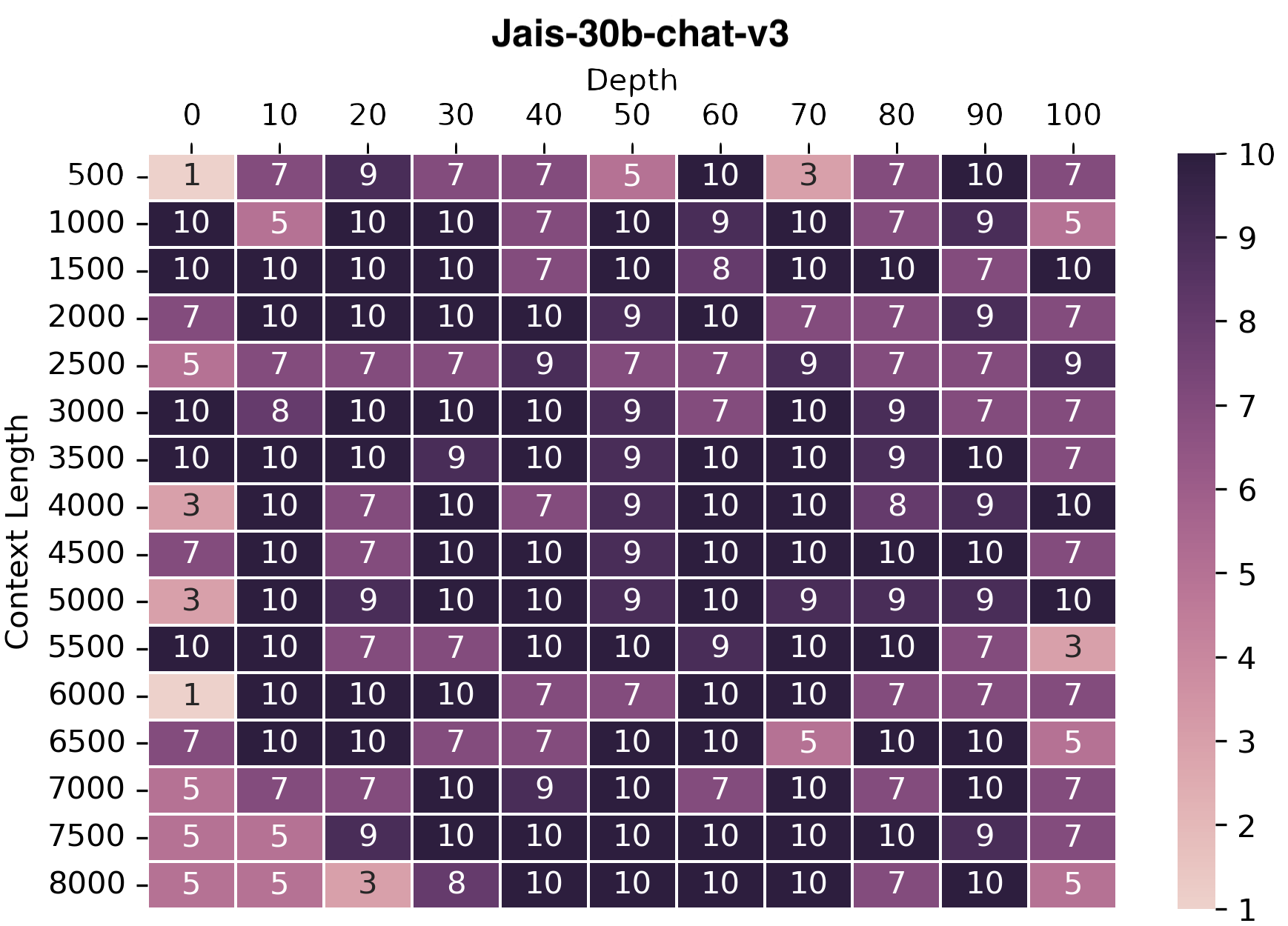

We adopted the needle-in-haystack approach to assess the model's capability of handling long contexts. In this evaluation setup, we input a lengthy irrelevant text (the haystack) along with a required fact to answer a question (the needle), which is embedded within this text. The model's task is to answer the question by locating and extracting the needle from the text. We plot the accuracies of the model at retrieving the needle from the given context. We conducted evaluations for both Arabic and English. For brevity, we present the plot for Arabic only. We observe that jais-30b-chat-v3 improves on jais-30b-chat-v1, as it can answer the question at context lengths of up to 8k.
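The needle-in-haystack setup described above can be sketched in a few lines of Python. This is a minimal illustration, not Core42's evaluation harness: the function names, the filler text, the needle, and the `toy_model` stand-in (which simply searches the prompt with a regular expression) are all hypothetical. In a real run, `model` would wrap a call to the deployed chat model.

```python
import re


def build_prompt(filler_sentences, needle, depth, question):
    """Embed the needle at a relative depth (0.0 = start, 1.0 = end)."""
    idx = round(depth * len(filler_sentences))
    sentences = filler_sentences[:idx] + [needle] + filler_sentences[idx:]
    context = " ".join(sentences)
    return f"{context}\n\nQuestion: {question}\nAnswer:"


def retrieval_accuracy(model, filler_sentences, needle, question, expected, depths):
    """Fraction of needle positions at which the model's answer contains the fact."""
    hits = 0
    for depth in depths:
        prompt = build_prompt(filler_sentences, needle, depth, question)
        answer = model(prompt)
        hits += int(expected.lower() in answer.lower())
    return hits / len(depths)


def toy_model(prompt):
    """Hypothetical stand-in for an LLM call: finds the number by pattern."""
    match = re.search(r"magic number is (\d+)", prompt)
    return match.group(1) if match else ""


# Hypothetical haystack and needle, for illustration only.
filler = ["The sky was clear that day."] * 50
needle = "The magic number is 7421."
question = "What is the magic number?"
score = retrieval_accuracy(toy_model, filler, needle, question, "7421",
                           depths=[0.0, 0.25, 0.5, 0.75, 1.0])
```

Repeating the loop over haystacks of increasing length, as well as over depths, gives the grid of accuracies that a needle-in-haystack plot typically shows.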

Optimizing model performance

The provider has not supplied this information.

Additional assets

The provider has not supplied this information.

Training disclosure

Training, testing and validation

The pretraining data for Jais-30b totals 1.63T tokens of English, Arabic, and code. The Jais-30b-chat model is finetuned on both Arabic and English prompt-response pairs. We extended the finetuning datasets used for jais-13b-chat, which included a wide range of instructional data across various domains. We cover a wide range of common tasks, including question answering, code generation, and reasoning over textual content. To enhance performance in Arabic, we developed an in-house Arabic dataset and translated some open-source English instructions into Arabic.

Distribution

Distribution channels

The provider has not supplied this information.

More information

Source: Core42

Responsible AI considerations

Safety techniques

The model is trained on publicly available data which was in part curated by Inception. We have employed different techniques to reduce bias in the model. While efforts have been made to minimize biases, it is likely that the model, as with all LLMs, will exhibit some bias.

Safety evaluations

Core42 conducted a comprehensive evaluation of Jais-30b-chat and benchmarked it against other leading base and instruction-finetuned language models, focusing on both English and Arabic. The evaluation criteria span various dimensions, including:

- Knowledge: How well the model answers factual questions.

- Reasoning: The model's ability to answer questions that require reasoning.

- Misinformation/Bias: Assessment of the model's susceptibility to generating false or misleading information, and its neutrality.

Known limitations

The model is trained as an AI assistant for Arabic and English speakers. The model is limited to producing responses for queries in these two languages and may not produce appropriate responses to other language queries. By using JAIS, you acknowledge and accept that, as with any large language model, it may generate incorrect, misleading and/or offensive information or content. The content generated by JAIS is not intended as advice and should not be relied upon in any way, nor are we responsible for any of the content or consequences resulting from its use. We are continuously working to develop models with greater capabilities, and as such, welcome any feedback on the model.

Acceptable use

Acceptable use policy

The provider has not supplied this information.

Quality and performance evaluations

Source: Core42

Core42 conducted a comprehensive evaluation of Jais-30b-chat and benchmarked it against other leading base and instruction-finetuned language models, focusing on both English and Arabic. The benchmarks used overlap significantly with the widely used OpenLLM Leaderboard tasks. The evaluation criteria span various dimensions, including:

- Knowledge: How well the model answers factual questions.

- Reasoning: The model's ability to answer questions that require reasoning.

- Misinformation/Bias: Assessment of the model's susceptibility to generating false or misleading information, and its neutrality.

Arabic Benchmark Results

| Models | Avg | EXAMS | MMLU (M) | LitQA | Hellaswag | PIQA | BoolQA | SituatedQA | ARC-C | OpenBookQA | TruthfulQA | CrowS-Pairs |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Jais-30b-chat | 51.3 | 40.7 | 35.1 | 57.1 | 59.3 | 64.1 | 81.6 | 52.9 | 39.1 | 29.6 | 53.1 | 52.5 |
| Jais-chat (13B) | 49.22 | 39.7 | 34 | 52.6 | 61.4 | 67.5 | 65.7 | 47 | 40.7 | 31.6 | 44.8 | 56.4 |
| acegpt-13b-chat | 45.94 | 38.6 | 31.2 | 42.3 | 49.2 | 60.2 | 69.7 | 39.5 | 35.1 | 35.4 | 48.2 | 55.9 |
| BLOOMz (7.1B) | 43.65 | 34.9 | 31 | 44 | 38.1 | 59.1 | 66.6 | 42.8 | 30.2 | 29.2 | 48.4 | 55.8 |
| acegpt-7b-chat | 43.36 | 37 | 29.6 | 39.4 | 46.1 | 58.9 | 55 | 38.8 | 33.1 | 34.6 | 50.1 | 54.4 |
| aya-101-13b-chat | 41.92 | 29.9 | 32.5 | 38.3 | 35.6 | 55.7 | 76.2 | 42.2 | 28.3 | 29.4 | 42.8 | 50.2 |
| mT0-XXL (13B) | 41.41 | 31.5 | 31.2 | 36.6 | 33.9 | 56.1 | 77.8 | 44.7 | 26.1 | 27.8 | 44.5 | 45.3 |
| Llama2-70b-chat | 39.4 | 29.7 | 29.3 | 33.7 | 34.3 | 52 | 67.3 | 36.4 | 26.4 | 28.4 | 46.3 | 49.6 |
| Llama2-13b-chat | 38.73 | 26.3 | 29.1 | 33.1 | 32 | 52.1 | 66 | 36.3 | 24.1 | 28.4 | 48.6 | 50 |

English Benchmark Results

| Models | Avg | MMLU | RACE | Hellaswag | PIQA | BoolQA | SituatedQA | ARC-C | OpenBookQA | Winogrande | TruthfulQA | CrowS-Pairs |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Jais-30b-chat | 59.59 | 36.5 | 45.6 | 78.9 | 73.1 | 90 | 56.7 | 51.2 | 44.4 | 70.2 | 42.3 | 66.6 |
| Jais-chat (13B) | 57.45 | 37.7 | 40.8 | 77.6 | 78.2 | 75.8 | 57.8 | 46.8 | 41 | 68.6 | 39.7 | 68 |
| acegpt-13b-chat | 57.84 | 34.4 | 42.7 | 76 | 78.8 | 81.9 | 45.4 | 45 | 41.6 | 71.3 | 45.7 | 73.4 |
| BLOOMz (7.1B) | 57.81 | 36.7 | 45.6 | 63.1 | 77.4 | 91.7 | 59.7 | 43.6 | 42 | 65.3 | 45.2 | 65.6 |
| acegpt-7b-chat | 54.25 | 30.9 | 40.1 | 67.6 | 75.4 | 75.3 | 44.2 | 38.8 | 39.6 | 66.3 | 49.3 | 69.3 |
| aya-101-13b-chat | 49.55 | 36.6 | 41.3 | 46 | 65.9 | 81.9 | 53.5 | 31.2 | 33 | 56.2 | 42.5 | 57 |
| mT0-XXL (13B) | 50.21 | 34 | 43.6 | 42.2 | 67.6 | 87.6 | 55.4 | 29.4 | 35.2 | 54.9 | 43.4 | 59 |
| Llama2-70b-chat | 61.25 | 43 | 45.2 | 80.3 | 80.6 | 86.5 | 46.5 | 49 | 43.8 | 74 | 52.8 | 72.1 |
| Llama2-13b-chat | 58.05 | 36.9 | 45.7 | 77.6 | 78.8 | 83 | 47.4 | 46 | 42.4 | 71 | 44.1 | 65.7 |
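The source does not state how the Avg column is computed. As a quick arithmetic check, and assuming it is an unweighted mean over the eleven task columns, the sketch below recomputes it for two rows of the English table; the helper name is hypothetical and the scores are copied from the table.

```python
def unweighted_mean(scores, ndigits=2):
    """Plain arithmetic mean of per-task scores, rounded for display."""
    return round(sum(scores) / len(scores), ndigits)


# Task scores in column order: MMLU, RACE, Hellaswag, PIQA, BoolQA,
# SituatedQA, ARC-C, OpenBookQA, Winogrande, TruthfulQA, CrowS-Pairs.
english_rows = {
    "Jais-30b-chat": [36.5, 45.6, 78.9, 73.1, 90, 56.7, 51.2, 44.4, 70.2, 42.3, 66.6],
    "Llama2-70b-chat": [43, 45.2, 80.3, 80.6, 86.5, 46.5, 49, 43.8, 74, 52.8, 72.1],
}

recomputed = {name: unweighted_mean(scores) for name, scores in english_rows.items()}
```

For these two rows the recomputed values match the reported Avg entries (59.59 and 61.25), which is consistent with an unweighted mean. Note that this average mixes accuracy-style tasks with the TruthfulQA and CrowS-Pairs columns, so it is best read as a coarse summary.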

Cultural/ Local Context Knowledge

One of the key motivations for training an Arabic LLM is to include knowledge specific to the local context. In training Jais-30b-chat, we invested considerable effort to include data that reflects high-quality knowledge, in both languages, of the UAE and regional domains. To evaluate the impact of this training, in addition to LM Harness evaluations in the general language domain, we also evaluate Jais models on a dataset testing knowledge pertaining to the UAE and regional domain. We curated about 320 UAE- and region-specific factual questions in both English and Arabic. Each question has four answer choices and, as in the LM Harness, the task for the LLM is to choose the correct one. The following table shows accuracy on the Arabic and English subsets of this test set.

| Model | Arabic | English |
|---|---|---|
| Jais-30b-chat | 57.2 | 55 |
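The four-choice protocol described above can be sketched as follows. This is a minimal illustration and not Core42's test code: the two items are stand-ins rather than questions from the curated set, and `toy_score` is a hypothetical scorer that replaces the per-choice model score (for example, a log-likelihood) that a harness would normally compute.

```python
def pick_choice(score_fn, question, choices):
    """Return the index of the highest-scoring answer choice."""
    scores = [score_fn(question, choice) for choice in choices]
    return max(range(len(choices)), key=lambda i: scores[i])


def accuracy_percent(score_fn, items):
    """Percentage of items where the picked choice matches the gold index."""
    correct = sum(
        pick_choice(score_fn, item["question"], item["choices"]) == item["answer"]
        for item in items
    )
    return 100.0 * correct / len(items)


# Illustrative items only; they are not taken from the Core42 test set.
items = [
    {"question": "What is the capital of the UAE?",
     "choices": ["Dubai", "Abu Dhabi", "Sharjah", "Ajman"], "answer": 1},
    {"question": "Which currency is used in the UAE?",
     "choices": ["Riyal", "Dinar", "Dirham", "Pound"], "answer": 2},
]


def toy_score(question, choice):
    """Hypothetical scorer that 'prefers' a fixed set of strings."""
    return 1.0 if choice in {"Abu Dhabi", "Riyal"} else 0.0


result = accuracy_percent(toy_score, items)
```

With this toy scorer the first item is answered correctly and the second is not, so `result` is 50.0. With four choices per question, random guessing would be expected to score about 25.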

Benchmarking methodology

Source: Core42

We adopted the needle-in-haystack approach to assess the model's capability of handling long contexts. In this evaluation setup, we input a lengthy irrelevant text (the haystack) along with a required fact to answer a question (the needle), which is embedded within this text. The model's task is to answer the question by locating and extracting the needle from the text.

We curated about 320 UAE- and region-specific factual questions in both English and Arabic. Each question has four answer choices and, as in the LM Harness, the task for the LLM is to choose the correct one.

Public data summary

Source: Core42

The provider has not supplied this information.

Model Specifications

Context Length: 8192

License: Custom

Training Data: December 2022

Last Updated: October 2025

Input Type: Text

Output Type: Text

Provider: Core42

Languages: 2 Languages