FLUX.1 Kontext [pro]

Version: 1

Direct from Azure models

Direct from Azure models are a select portfolio curated for their market-differentiated capabilities:

- Secure and managed by Microsoft: Purchase and manage models directly through Azure with a single license, consistent support, and no third-party dependencies, backed by Azure's enterprise-grade infrastructure.

- Streamlined operations: Benefit from unified billing, governance, and seamless PTU portability across models hosted on Azure, all part of Microsoft Foundry.

- Future-ready flexibility: Access the latest models as they become available, and easily test, deploy, or switch between them within Microsoft Foundry, reducing integration effort.

- Cost control and optimization: Scale on demand with pay-as-you-go flexibility or reserve PTUs for predictable performance and savings.

Key capabilities

About this model

Generate and edit images through both text and image prompts. FLUX.1 Kontext is a multimodal flow matching model that enables both text-to-image generation and in-context image editing. It can modify images while maintaining character consistency and perform local edits up to 8x faster than other leading models.

Key model capabilities

The model provides powerful text-to-image and image-editing capabilities:

- Change existing images based on an edit instruction.

- Use character, style, and object references without any fine-tuning.

- Robust consistency allows users to refine an image through multiple successive edits with minimal visual drift.
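The description above calls FLUX.1 Kontext a flow matching model but gives no architectural details. As a generic, hypothetical illustration of that technique (not Black Forest Labs' implementation): a flow matching model learns a velocity field that transports noise at t = 0 to data at t = 1, and sampling integrates that field numerically. The sketch below uses plain Euler steps and, instead of a trained network, plugs in the exact velocity of a straight-line path toward one known target so the result can be checked. The names `euler_sample` and `straight_path_velocity` are invented for this example; real models work on image latents with a learned, prompt-conditioned network, none of which is shown here.

```python
"""Toy flow matching sampler (generic technique, NOT the FLUX.1 Kontext code).

Assumption: the velocity field is a hand-written stand-in for a trained
network, chosen so the final sample is known in advance.
"""
from typing import Callable, List

Vector = List[float]
VelocityField = Callable[[Vector, float], Vector]


def euler_sample(x0: Vector, velocity: VelocityField, num_steps: int) -> Vector:
    """Integrate dx/dt = v(x, t) from t = 0 (noise) to t = 1 (data) with Euler steps."""
    if num_steps < 1:
        raise ValueError("num_steps must be >= 1")
    dt = 1.0 / num_steps
    x = list(x0)
    for i in range(num_steps):
        t = i * dt  # t stays strictly below 1 inside the loop
        v = velocity(x, t)
        x = [xi + dt * vi for xi, vi in zip(x, v)]
    return x


def straight_path_velocity(target: Vector) -> VelocityField:
    """Exact velocity of the linear path x_t = (1 - t) * x0 + t * target.

    On that path, (target - x_t) / (1 - t) equals the constant target - x0,
    which is the quantity a flow matching network is trained to predict.
    This closed form is a hypothetical stand-in for such a network.
    """
    def v(x: Vector, t: float) -> Vector:
        return [(ti - xi) / (1.0 - t) for xi, ti in zip(x, target)]
    return v


if __name__ == "__main__":
    noise = [0.3, -1.2]
    image = [1.0, 2.0]  # toy "data" point standing in for an image latent
    print(euler_sample(noise, straight_path_velocity(image), num_steps=4))
```

Starting from `[0.3, -1.2]`, every step here adds the same velocity `[0.7, 3.2]` scaled by `dt`, so the sampler lands on `[1.0, 2.0]` up to floating-point rounding. Because a straight path has constant velocity, even one Euler step suffices in this toy case; a learned field is only approximate, so practical samplers generally take more steps.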

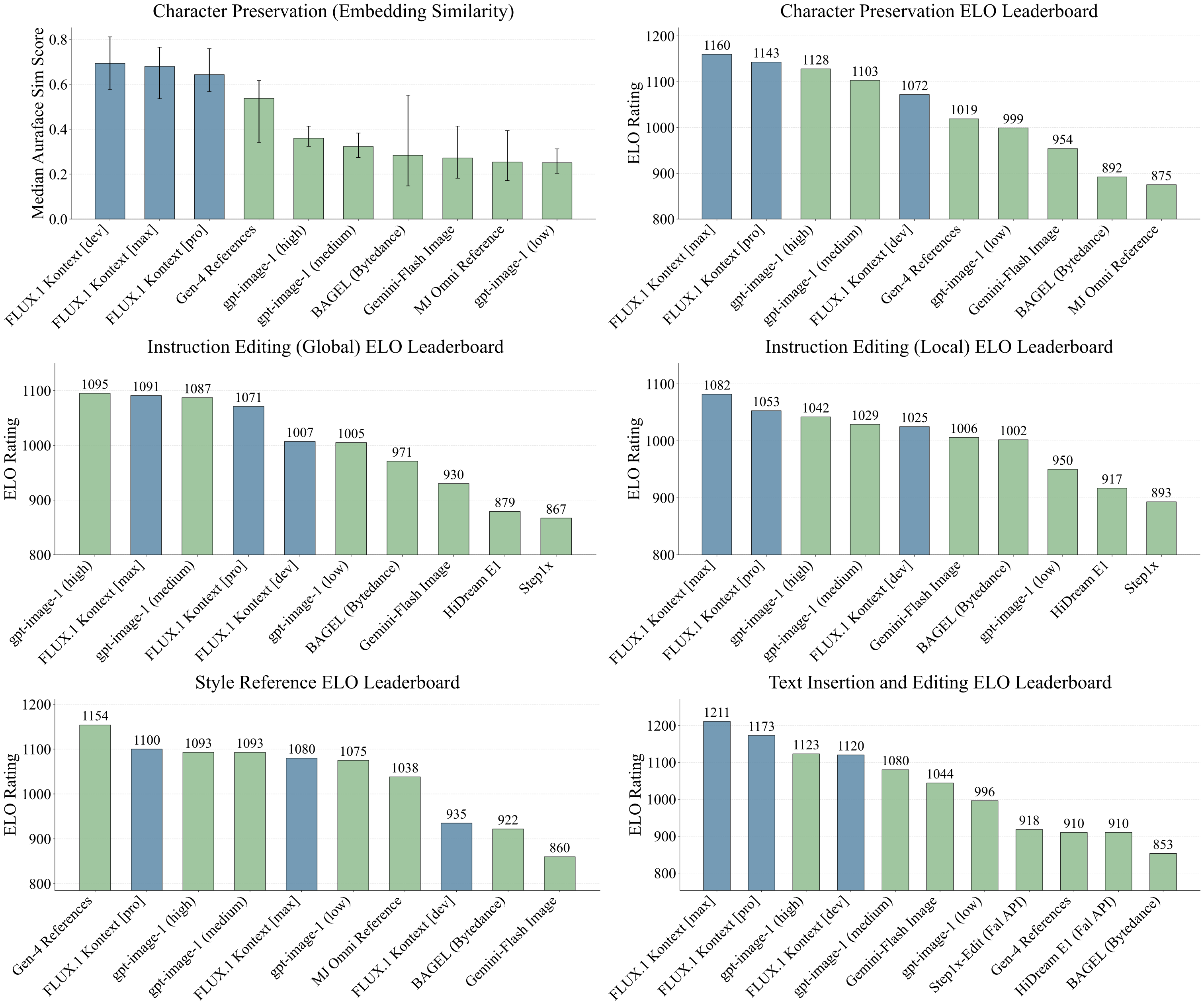

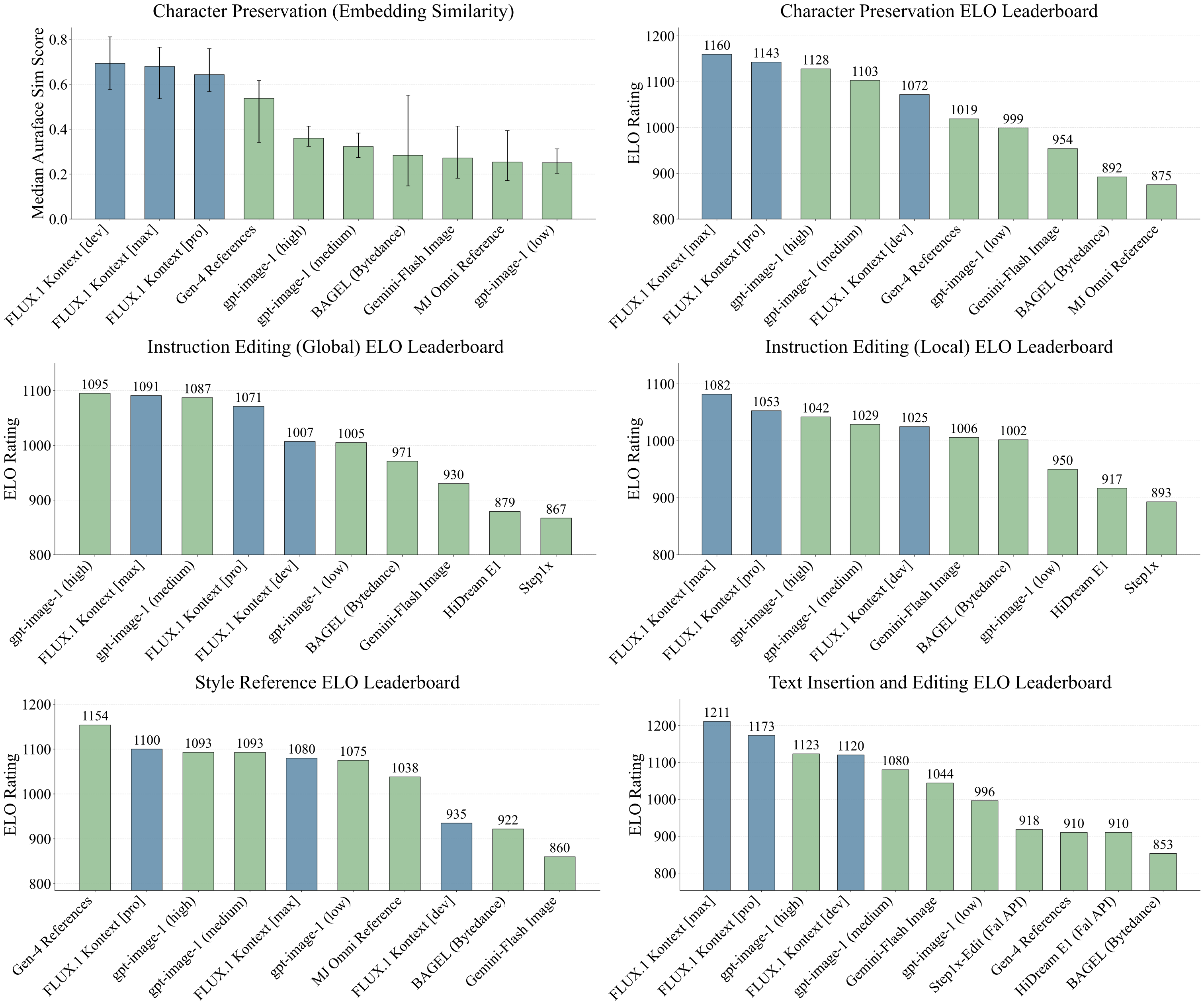

We show evaluation results across six in-context image generation tasks. FLUX.1 Kontext [pro] consistently ranks among the top performers across all tasks, achieving the highest scores in Text Editing and Character Preservation.

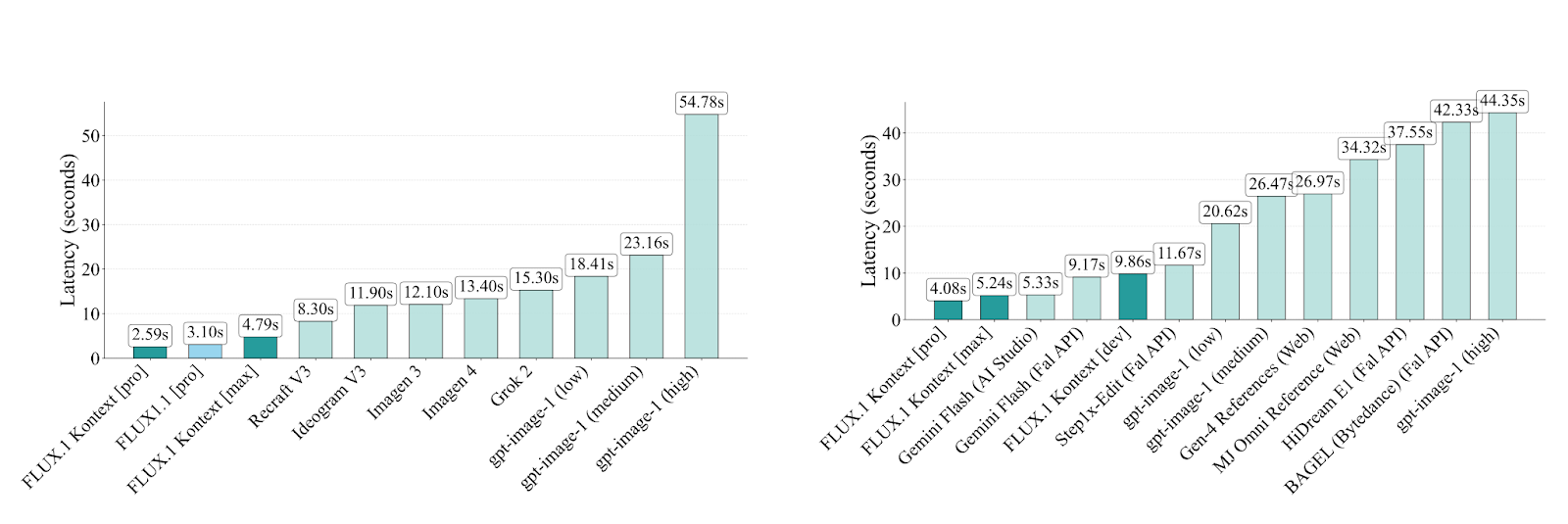

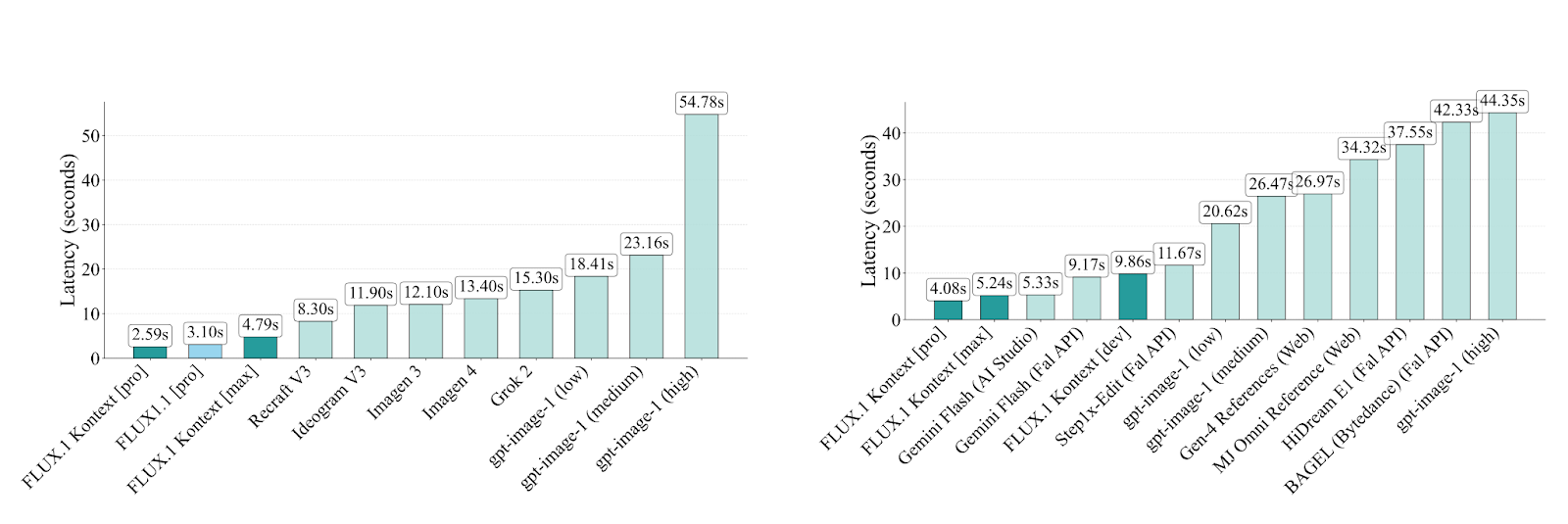

FLUX.1 Kontext models consistently achieve lower latencies than competing state-of-the-art models for both text-to-image generation (left) and image-editing (right)

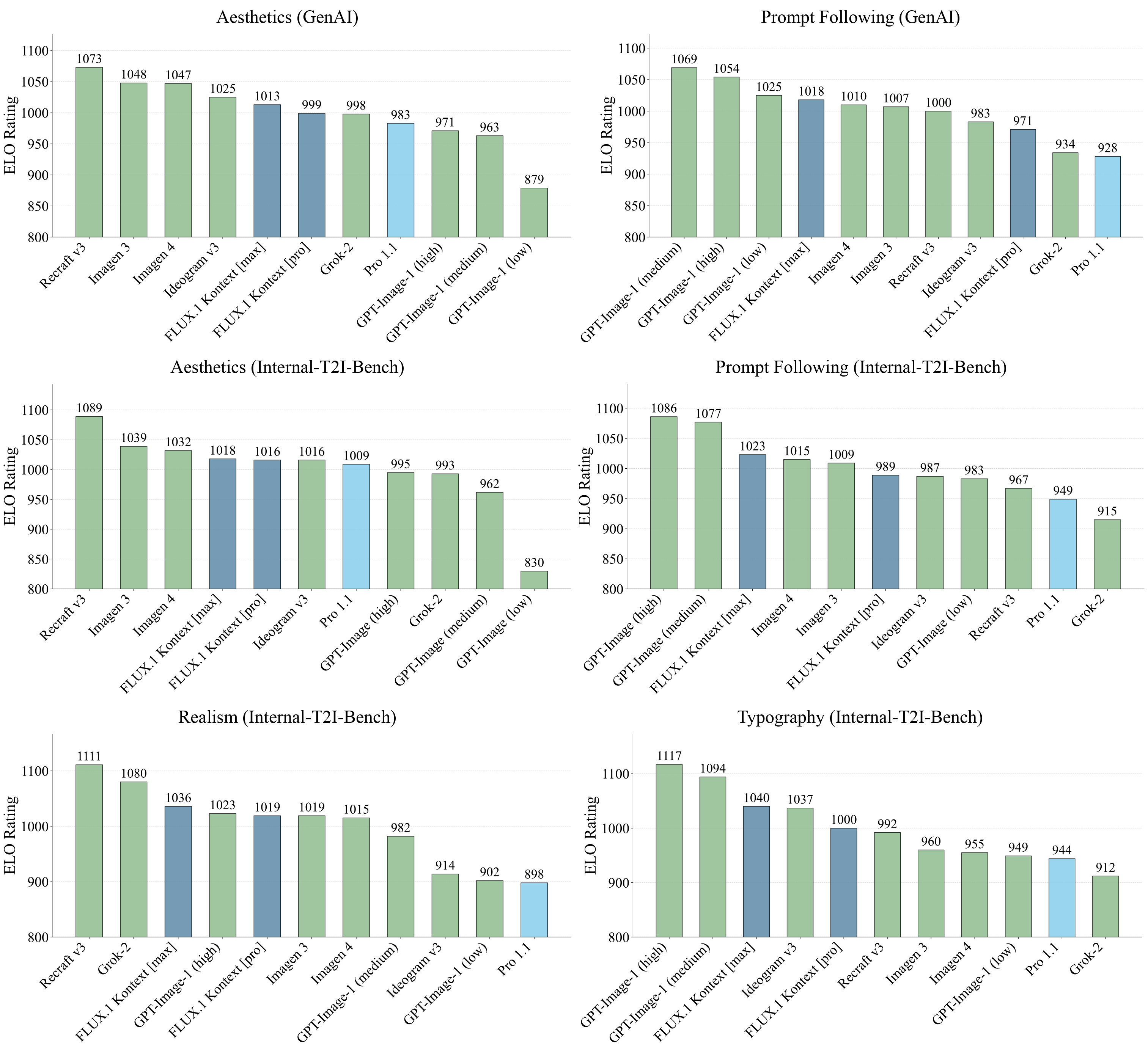

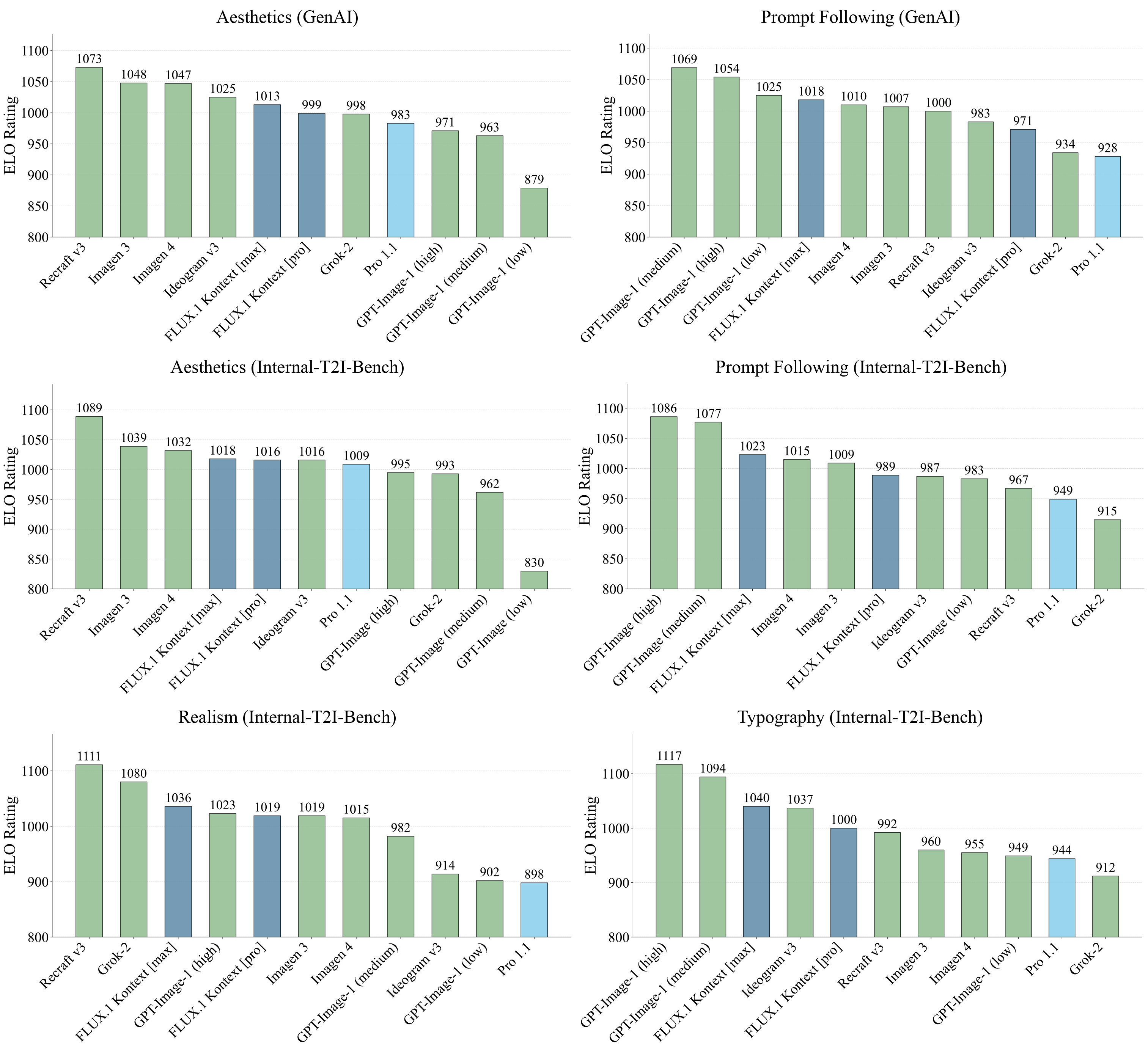

FLUX.1 Kontext models demonstrate competitive performance across aesthetics, prompt following, typography, and realism benchmarks.

left: input image; middle: edit from input: "tilt her head towards the camera", right: "make her laugh"

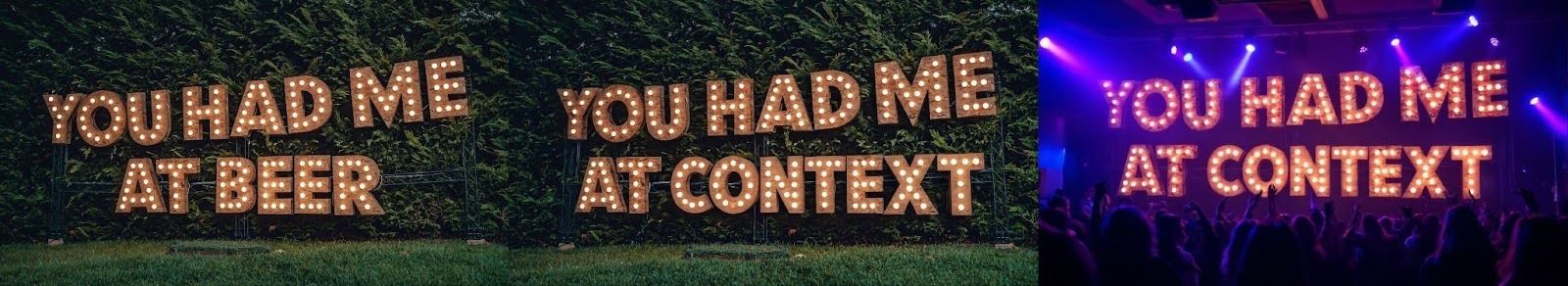

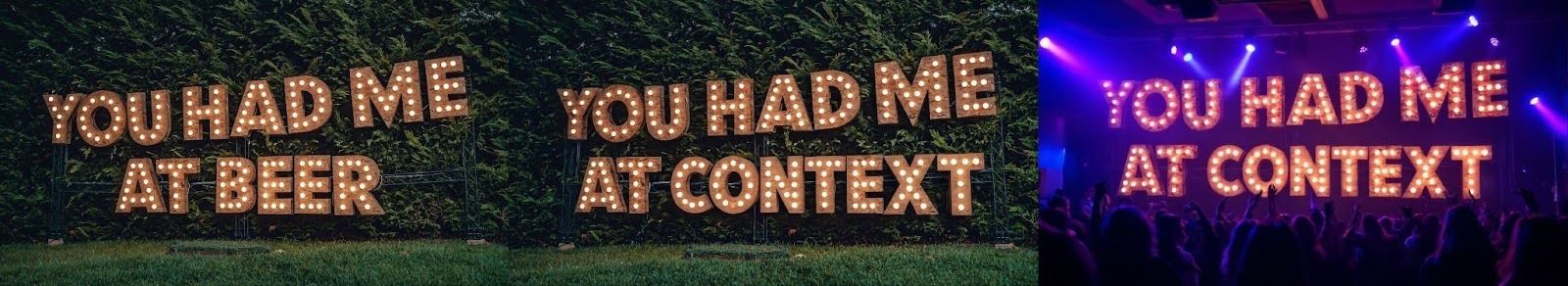

left: input image; middle: edit from input: "change the 'YOU HAD ME AT BEER' to 'YOU HAD ME AT CONTEXT'", right: "change the setting to a night club"

FLUX.1 Kontext exhibits some limitations in its current implementation. Excessive multi-turn editing sessions can introduce visual artifacts that degrade image quality. The model occasionally fails to follow instructions accurately, ignoring specific prompt requirements in rare cases. World knowledge remains limited, affecting the model's ability to generate contextually accurate content. Additionally, the distillation process can introduce visual artifacts that impact output fidelity.

Illustration of a FLUX.1 Kontext failure case: After six iterative edits, the generation is visually degraded and contains visible artifacts.

Use cases

See Responsible AI for additional considerations for responsible use.

Key use cases

The model is intended for broad commercial and research use. The model provides powerful text-to-image and image-editing capabilities:

- Change existing images based on an edit instruction.

- Use character, style, and object references without any fine-tuning.

- Robust consistency allows users to refine an image through multiple successive edits with minimal visual drift.

Out of scope use cases

The provider has not supplied this information.

Pricing

Pricing is based on a number of factors, including deployment type and tokens used. See pricing details here.

Technical specs

The provider has not supplied this information.

Training cut-off date

The provider has not supplied this information.

Training time

The provider has not supplied this information.

Input formats

The provider has not supplied this information.

Output formats

The provider has not supplied this information.

Supported languages

English

Sample JSON response

The provider has not supplied this information.

Model architecture

FLUX.1 Kontext is a multimodal flow matching model.

Long context

The provider has not supplied this information.

Optimizing model performance

The provider has not supplied this information.

Additional assets

For more information, please read our blog post and our technical report. You can find information about the [pro] version here. To validate the performance of our FLUX.1 Kontext models, we conducted an extensive performance evaluation that we release in a tech report. The benchmark can be found here.

Training disclosure

Training, testing and validation

We filtered pre-training data for multiple categories of "not safe for work" (NSFW) content to help prevent a user from generating unlawful content in response to text prompts or uploaded images. We have partnered with the Internet Watch Foundation, an independent nonprofit organization dedicated to preventing online abuse, to filter known child sexual abuse material (CSAM) from post-training data. Subsequently, we undertook multiple rounds of targeted fine-tuning to provide additional mitigation against potential abuse.

Distribution

Distribution channels

The provider has not supplied this information.

More information

Model developer: Black Forest Labs

Model release date: June 30, 2025

Responsible AI considerations

Safety techniques

Black Forest Labs is committed to the responsible development of generative AI technology. Prior to releasing FLUX.1 Kontext, we evaluated and mitigated a number of risks in our models and services, including the generation of unlawful content. We implemented a series of pre-release mitigations to help prevent misuse by third parties, with additional post-release mitigations to help address residual risks:

- Pre-training mitigation. We filtered pre-training data for multiple categories of "not safe for work" (NSFW) content to help prevent a user from generating unlawful content in response to text prompts or uploaded images.

- Post-training mitigation. We have partnered with the Internet Watch Foundation, an independent nonprofit organization dedicated to preventing online abuse, to filter known child sexual abuse material (CSAM) from post-training data. Subsequently, we undertook multiple rounds of targeted fine-tuning to provide additional mitigation against potential abuse. By inhibiting certain behaviors and concepts in the trained model, these techniques can help prevent a user from generating synthetic CSAM or nonconsensual intimate imagery (NCII) from a text prompt, or from transforming an uploaded image into synthetic CSAM or NCII.

Safety evaluations

Throughout this process, we conducted multiple internal and external third-party evaluations of model checkpoints to identify further opportunities for improvement. The third-party evaluations, which covered 21 checkpoints of FLUX.1 Kontext [pro], focused on eliciting CSAM and NCII through adversarial testing with text-only prompts as well as uploaded images with text prompts. Next, we conducted a final third-party evaluation of the proposed release checkpoints, focused on text-to-image and image-to-image CSAM and NCII generation. The final FLUX.1 Kontext [pro] model demonstrated very high resilience against violative inputs. Based on these findings, we approved the release of the FLUX.1 Kontext [pro] model via API.

Known limitations

FLUX.1 Kontext exhibits some limitations in its current implementation. Excessive multi-turn editing sessions can introduce visual artifacts that degrade image quality. The model occasionally fails to follow instructions accurately, ignoring specific prompt requirements in rare cases. World knowledge remains limited, affecting the model's ability to generate contextually accurate content. Additionally, the distillation process can introduce visual artifacts that impact output fidelity.

Illustration of a FLUX.1 Kontext failure case: After six iterative edits, the generation is visually degraded and contains visible artifacts.

Acceptable use

Acceptable use policy

The provider has not supplied this information.

Quality and performance evaluations

Source: Black Forest Labs

To validate the performance of our FLUX.1 Kontext models, we conducted an extensive performance evaluation that we release in a tech report. Here we give a short summary: to evaluate our models, we compiled KontextBench, a benchmark for text-to-image and image-to-image generation built from crowd-sourced, real-world use cases. The benchmark can be found here.

We evaluate image-to-image models, including our FLUX.1 Kontext models, across six KontextBench tasks. FLUX.1 Kontext [pro] consistently ranks among the top performers across all tasks, achieving the highest scores in text editing and character preservation (see Figure above), while consistently outperforming competing state-of-the-art models in inference speed (see Figure below).

FLUX.1 Kontext models consistently achieve lower latencies than competing state-of-the-art models for both text-to-image generation (left) and image-editing (right).

We evaluate FLUX.1 Kontext on text-to-image benchmarks across multiple quality dimensions. FLUX.1 Kontext models demonstrate competitive performance across aesthetics, prompt following, typography, and realism benchmarks.

Benchmarking methodology

Source: Black Forest Labs

The provider has not supplied this information.

Public data summary

Source: Black Forest Labs

The provider has not supplied this information.

Model Specifications

Context Length: 131072

License: Custom

Last Updated: November 2025

Input Type: Text, Image

Output Type: Image

Provider: Black Forest Labs

Languages: 1 Language